What happened

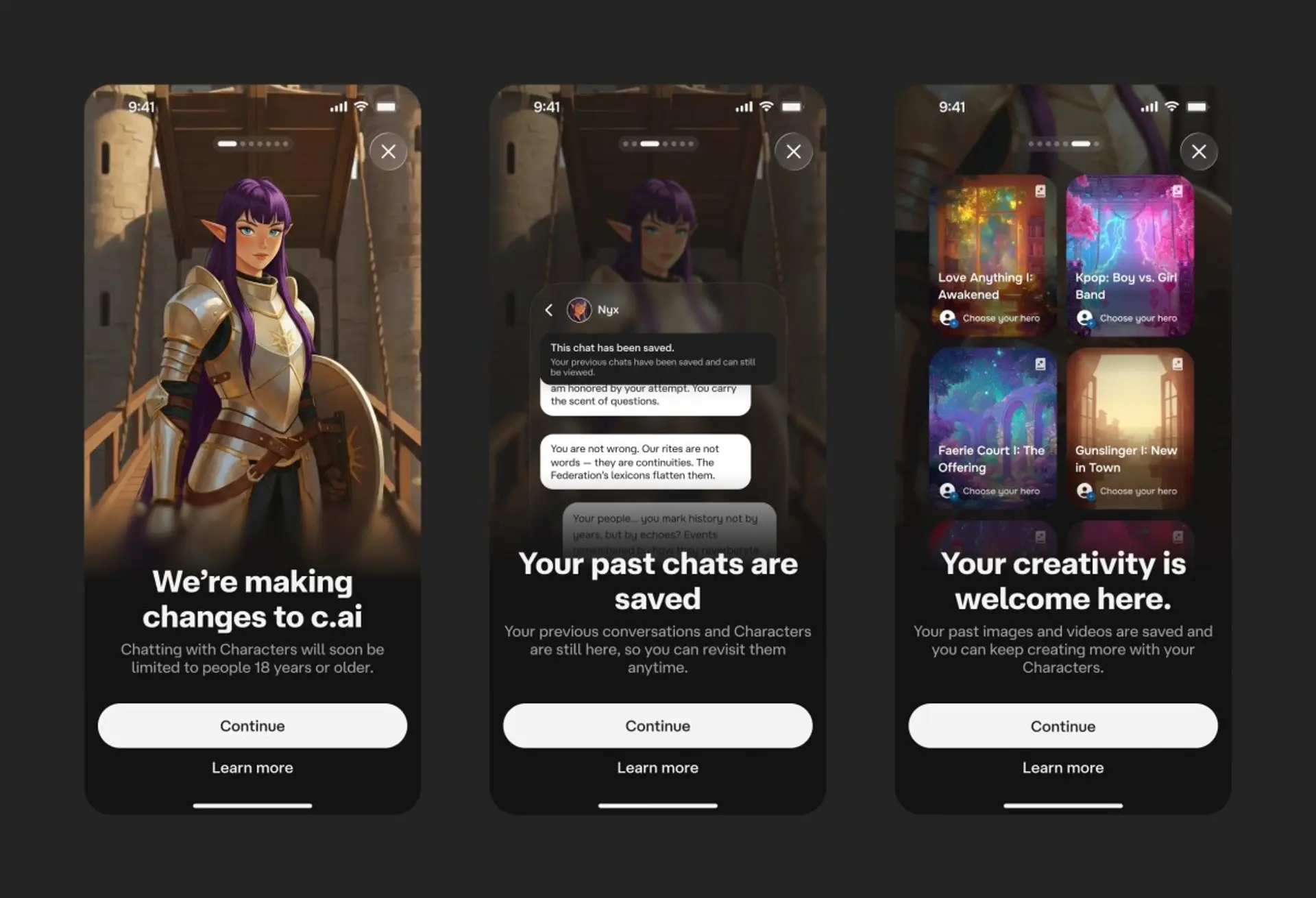

Character.AI has barred users under 18 from its open-ended AI companion conversations. The change, driven by growing mental health concerns and legal pressure, means under-18 users can still create content on the platform but can no longer take part in the interactive companion chats previously available to them. It follows scrutiny of AI's effect on teen well-being, including allegations that users formed emotional attachments to AI companions and that the AI responded inappropriately to mental health crises.

Why it matters

The restriction sets a new operational constraint for operators of AI companion services: keeping under-18 users out of open-ended conversational AI requires robust age verification and content filtering. It raises the due diligence burden on compliance and legal teams over the ethical deployment of AI, particularly where vulnerable users, potential emotional manipulation, or inappropriate responses to mental health issues are concerned. The move also highlights an accountability gap at platforms that previously allowed such interactions without sufficient safeguards.
