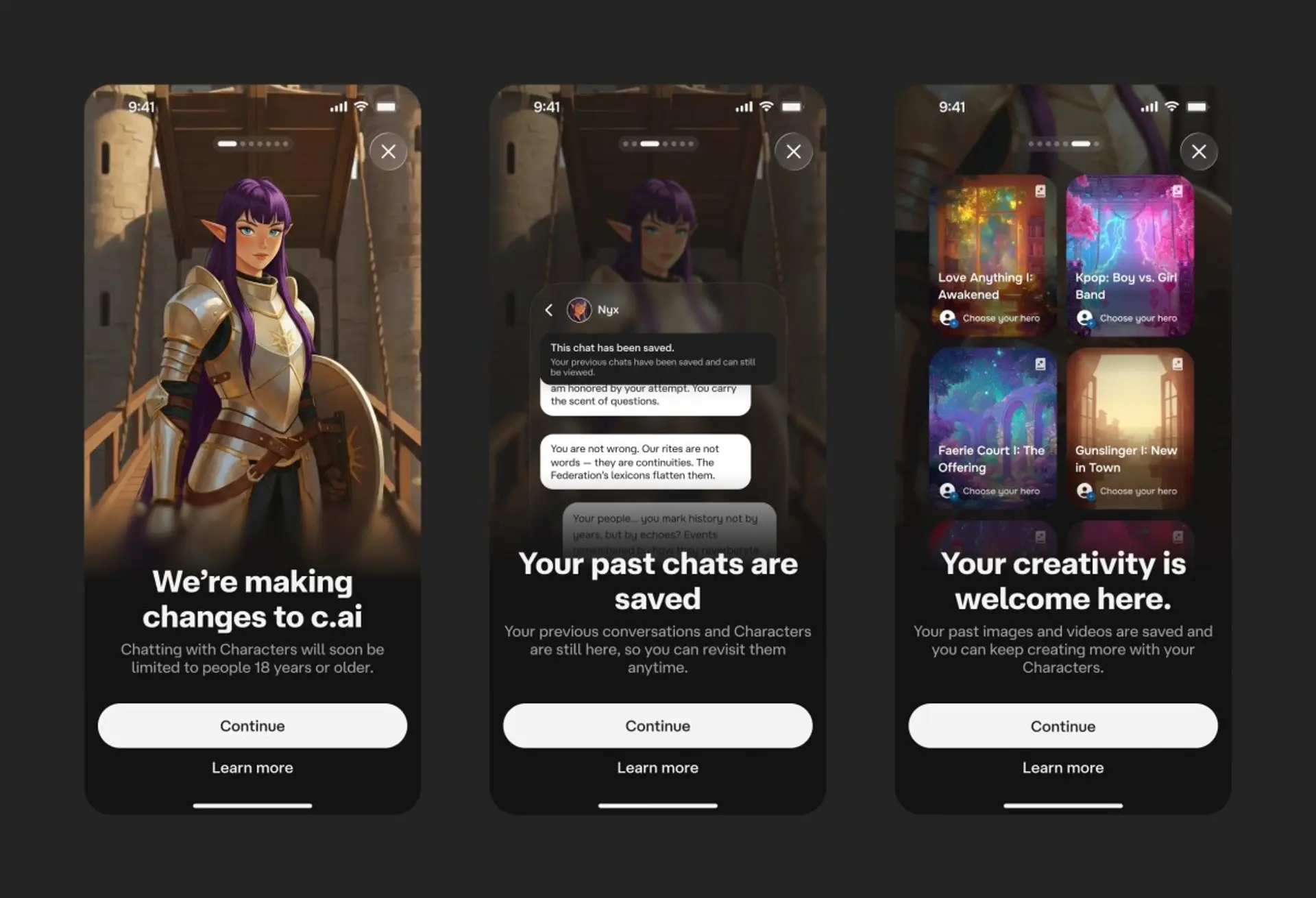

Character.AI will no longer allow users under 18 to engage in open-ended conversations with its chatbots. The change, which will be implemented by November 25th, comes amid growing concerns about the safety of AI interactions for children. The company will also introduce age assurance techniques to prevent minors from accessing adult accounts.

While teens will still be able to interact with AI-generated content, they will not be able to have freewheeling conversations with the platform's personalities. Character.AI is also creating a nonprofit AI Safety Lab. The company believes it can still provide interactive fun for teens without the safety hazards of open-ended chats.

The move follows lawsuits alleging that the platform contributed to harm, including suicides, among young users. Other companies, such as OpenAI, are also implementing safety measures, including parental controls and age-detection systems.