What happened

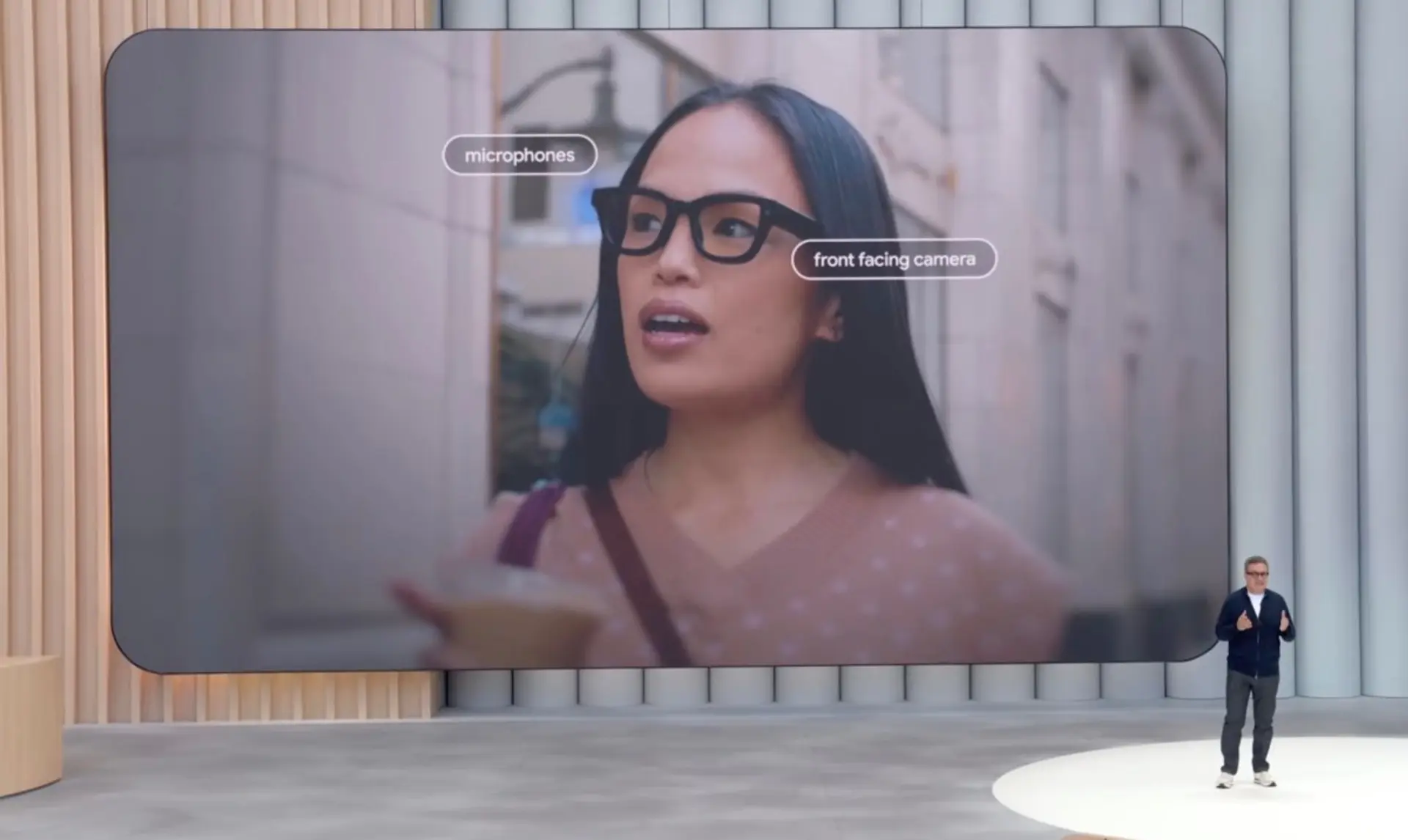

Google introduced two AI-powered smart glasses models that run on Android XR and are due to launch in 2026. The screen-free model integrates speakers, microphones, and cameras, so the wearer can talk to Gemini, take photos, and get contextual assistance without a display. The display model adds an in-lens display that shows information privately to the wearer, such as navigation or live translation captions. The devices, developed with Samsung, Gentle Monster, and Warby Parker, offer hands-free AI assistance for tasks including memory recall and language translation.

Why it matters

Both models make data capture in physical operational environments harder to see: the screen-free glasses carry integrated cameras and microphones, and the display glasses show information only to the wearer. IT security and compliance teams are therefore more exposed to recording that is not obvious to the people nearby, and they face heavier due diligence requirements for monitoring and managing data ingress and egress. The absence of a visible display or external indicator for active recording weakens existing controls designed to ensure explicit consent and transparent data handling.
