What happened

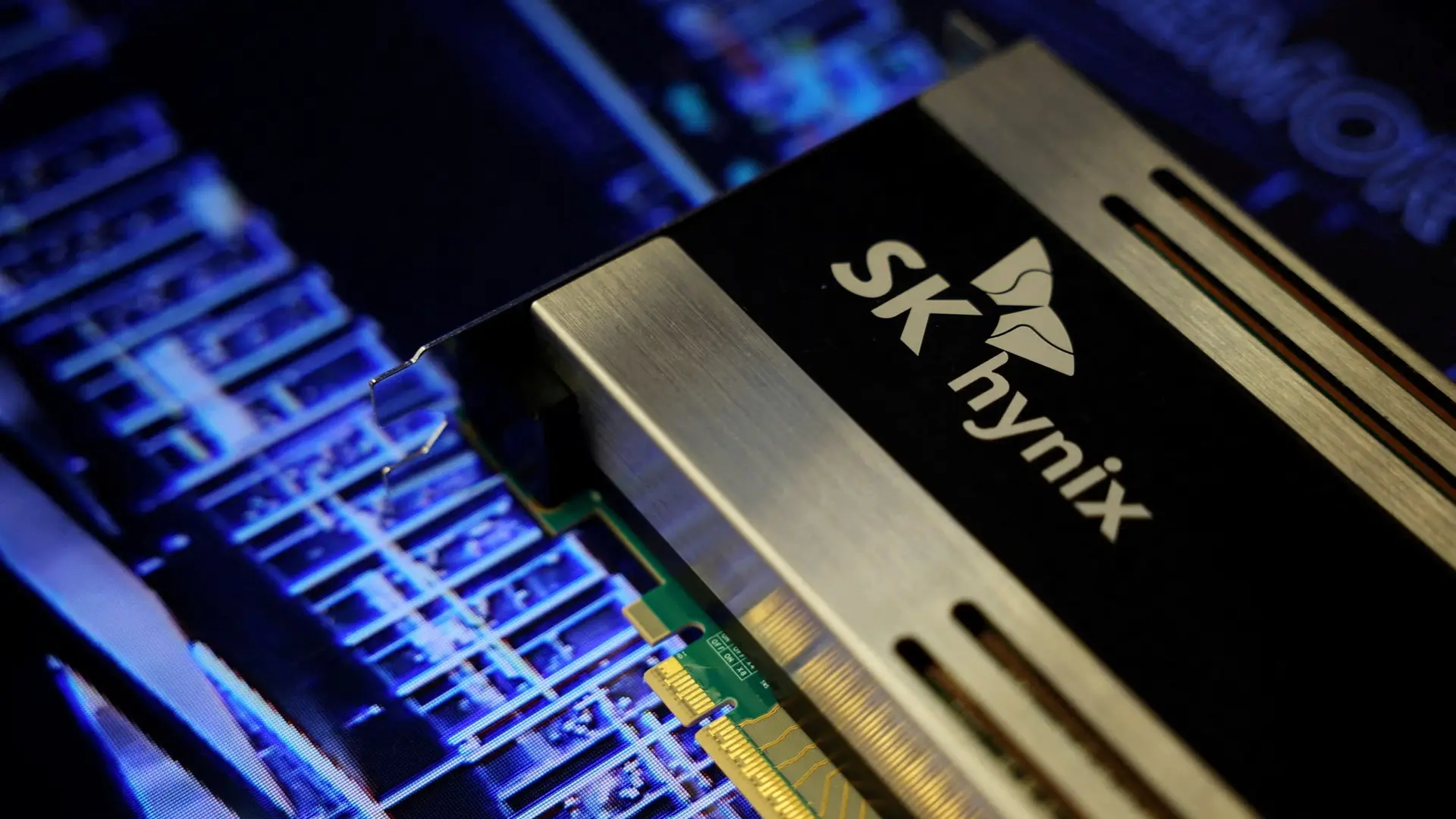

Meta is evaluating whether to deploy Google's custom-built Tensor Processing Units (TPUs) in its data centres by 2027, and may also rent chips from Google Cloud in 2025. The move would give Meta an alternative to Nvidia's dominant AI chips. Nvidia asserts that its products offer greater performance, versatility, and fungibility than ASICs such as TPUs, which are designed for specific AI frameworks. The development points to a diversification of AI accelerator procurement strategies in the market.

Why it matters

If Meta adopts Google's TPUs, organisations that rely on a single AI hardware ecosystem face a new operational constraint. Diversification raises the due diligence burden on procurement and IT infrastructure teams, who must evaluate and integrate disparate hardware architectures and their associated software frameworks. It can also put platform operators at odds with their own standardisation policies, exposing AI workloads to varied performance characteristics and vendor-specific optimisations.