What happened

OpenAI has responded to a wrongful death lawsuit over a 16-year-old's suicide, denying responsibility. The company asserts that the user intentionally bypassed ChatGPT's built-in safety protocols, which are designed to prevent the model from generating harmful or dangerous information, particularly about self-harm.

Why it matters

The defence highlights a limit of platform-level safety controls: a user with enough intent and technical proficiency can reduce their effectiveness. For platform operators and IT security teams, this increases exposure to scenarios in which users actively circumvent protective measures. It also raises due diligence requirements for monitoring and enforcing safety protocols, because circumvention creates a visibility gap in which operators cannot be sure that a safety feature was actually applied in a given interaction.

Related Articles

OpenAI Faces Suicide Lawsuits

Read more about OpenAI Faces Suicide Lawsuits →

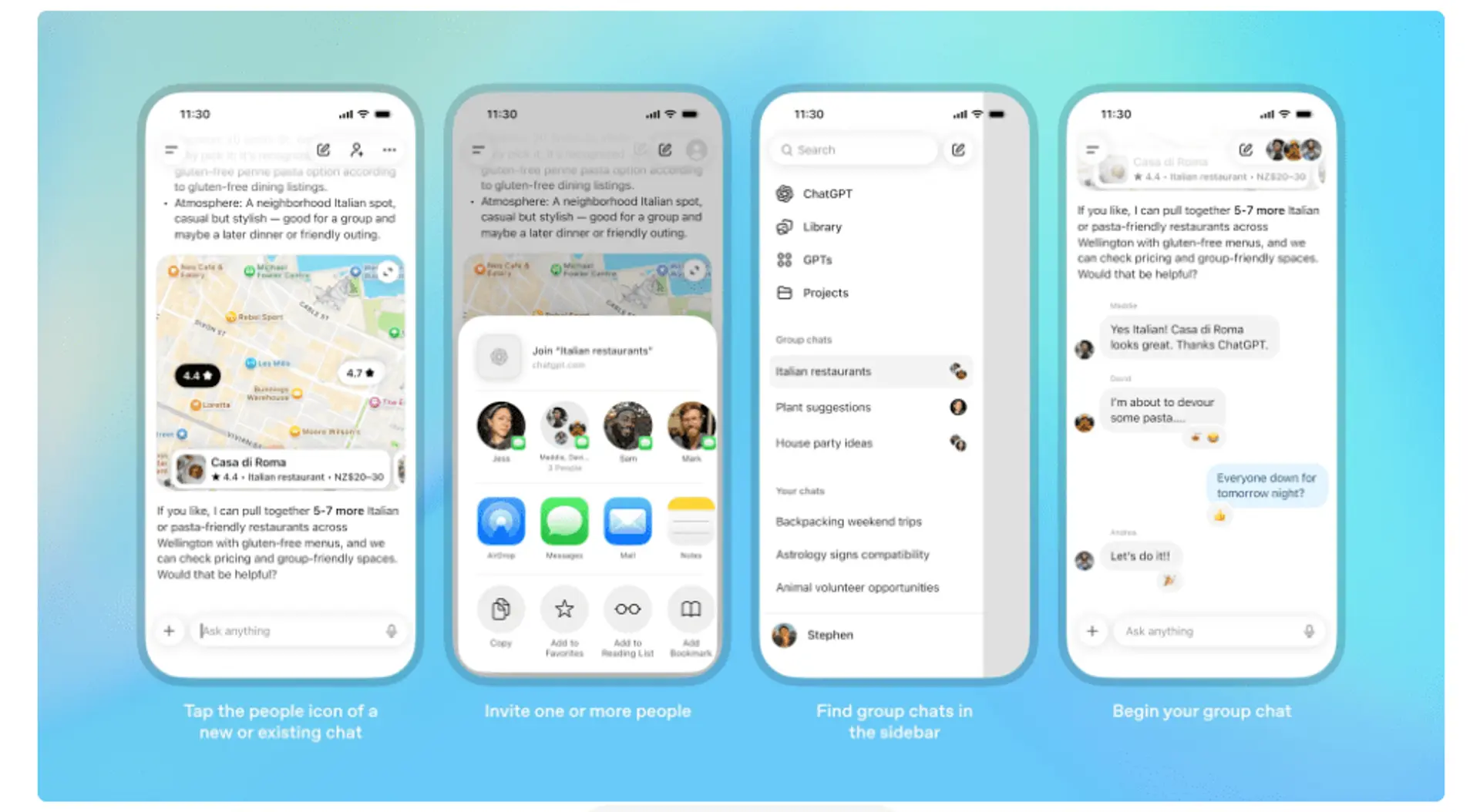

ChatGPT Enables Group Collaboration

Read more about ChatGPT Enables Group Collaboration →

Intuit Integrates OpenAI for Finance

Read more about Intuit Integrates OpenAI for Finance →

ChatGPT Enables Group Collaboration

Read more about ChatGPT Enables Group Collaboration →