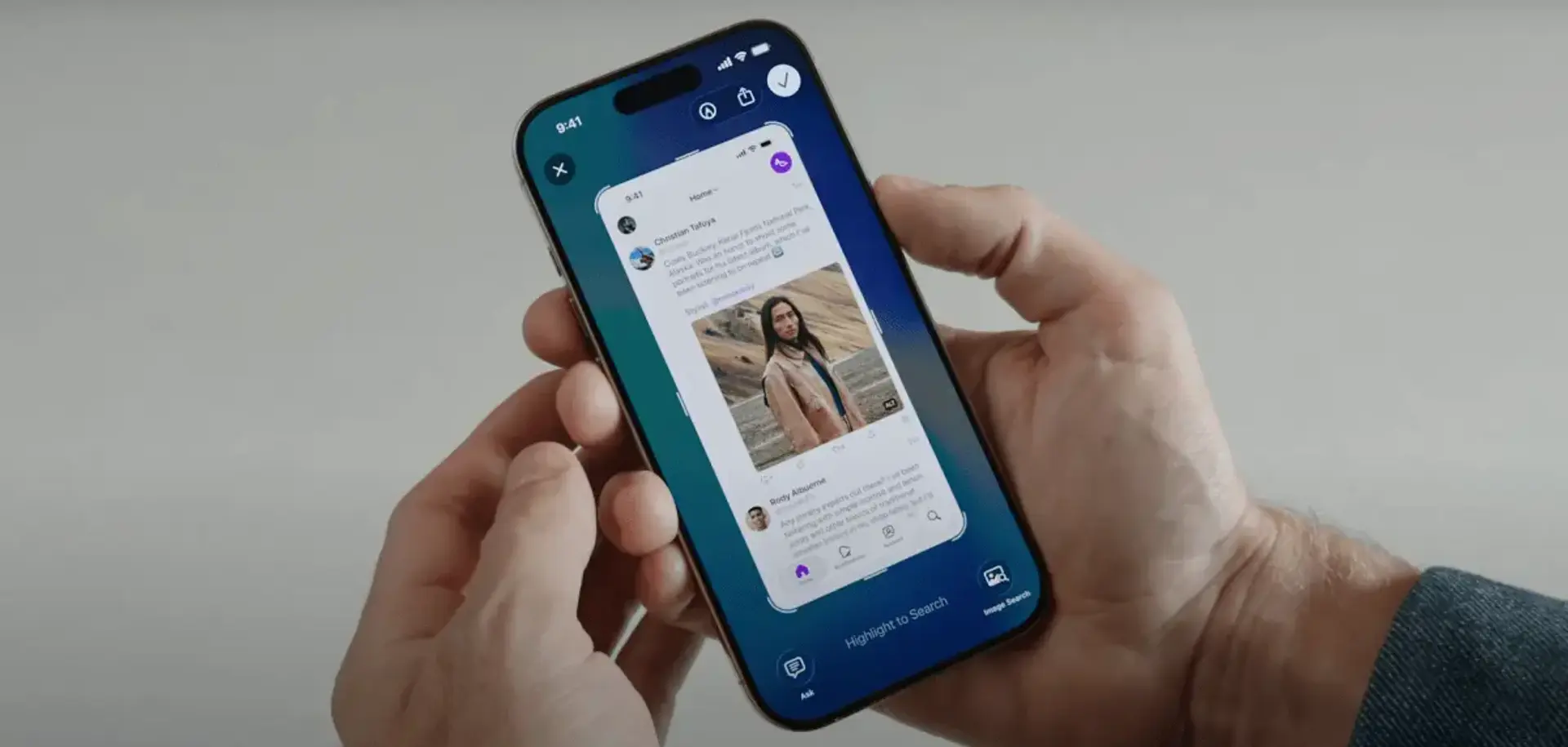

With iOS 26, Apple is extending its AI-powered Visual Intelligence feature to content on the iPhone screen. Users can act on what they are looking at in new ways, such as searching Google or Etsy for similar items, or adding a detected event directly to their calendar. Visual Intelligence also integrates with ChatGPT, so users can ask questions about what they are viewing.

This update is part of a broader push to integrate Apple Intelligence more deeply into iOS. The new Foundation Models framework gives developers access to the on-device model behind Apple Intelligence, so their apps can use AI processing locally. Separately, Apple is adding Live Translation to Messages, FaceTime, and Phone. The update also brings improvements to Genmoji and Image Playground, giving users more creative options for self-expression.

iOS 26 will be compatible with iPhone 11 and later models. Apple Intelligence features, however, require newer hardware: the iPhone 15 Pro and iPhone 15 Pro Max, the iPhone 16 series, and iPad and Mac models with an M1 chip or later.