What happened

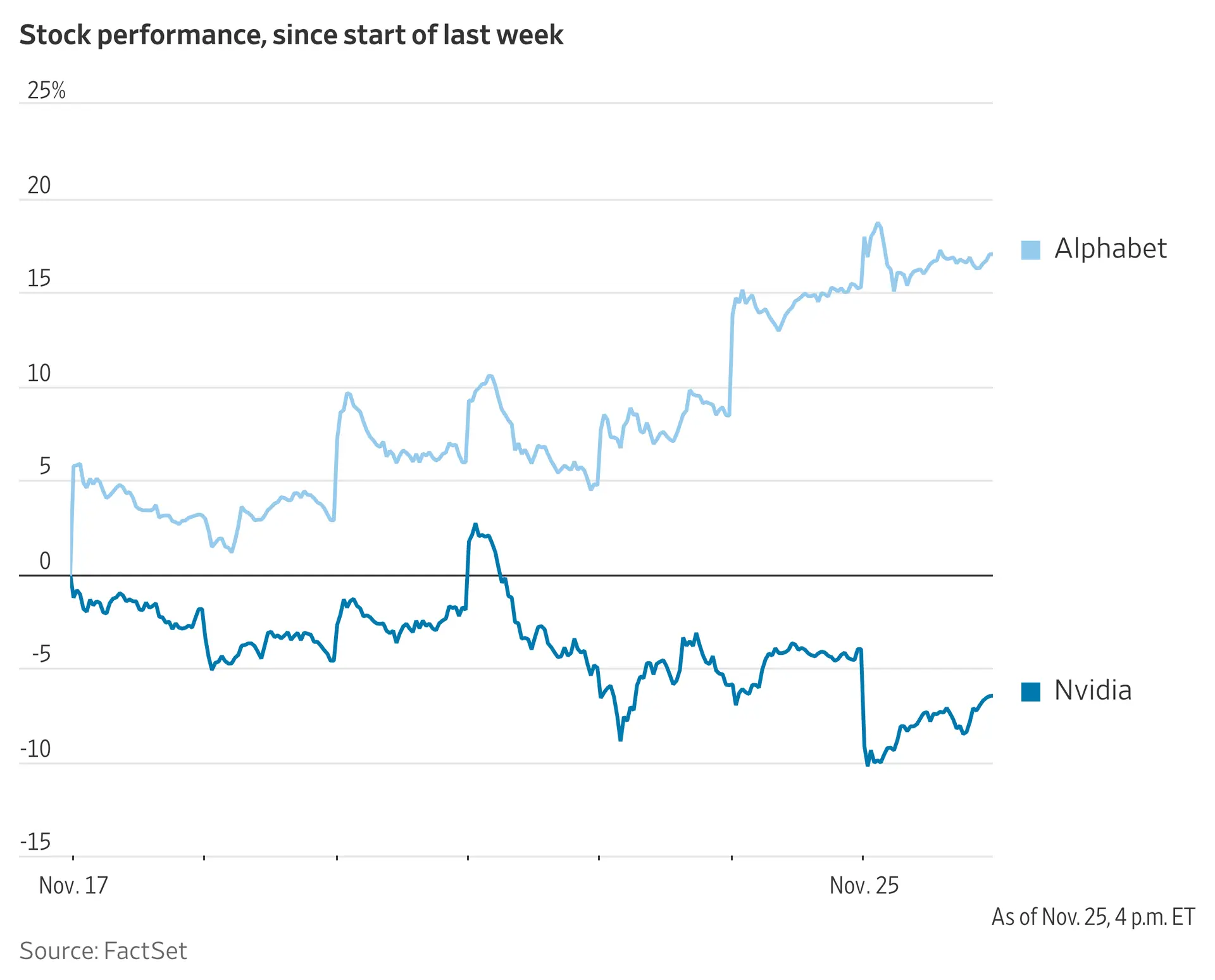

Google is now pitching its Tensor Processing Units (TPUs) directly to major tech and financial firms for integration into their data centres, and Meta is reportedly considering a multi-billion-dollar investment in TPUs by 2027. At the same time, AMD has introduced new chips that CEO Lisa Su claims surpass Nvidia's in efficiency, and it positions its ROCm platform as a non-proprietary, open-stack alternative to Nvidia's CUDA that could reduce costs. These moves come as Nvidia prepares its next-generation Rubin platform for mass production by 2026, in an AI chip market projected to reach $500 billion by 2028.

Why it matters

The arrival of Google's TPUs and AMD's open ROCm stack as credible options changes how vendor selection and ecosystem dependency must be weighed for AI infrastructure. It weakens the control previously exerted by a single dominant vendor's proprietary hardware and software stack. That choice comes at a cost: procurement teams face heavier due diligence when evaluating alternative solutions and their integration complexity. Platform operators and IT architecture teams, in turn, must assess multi-vendor strategies and the operational overhead they carry, including greater exposure to integration problems and fragmented support.
