What happened

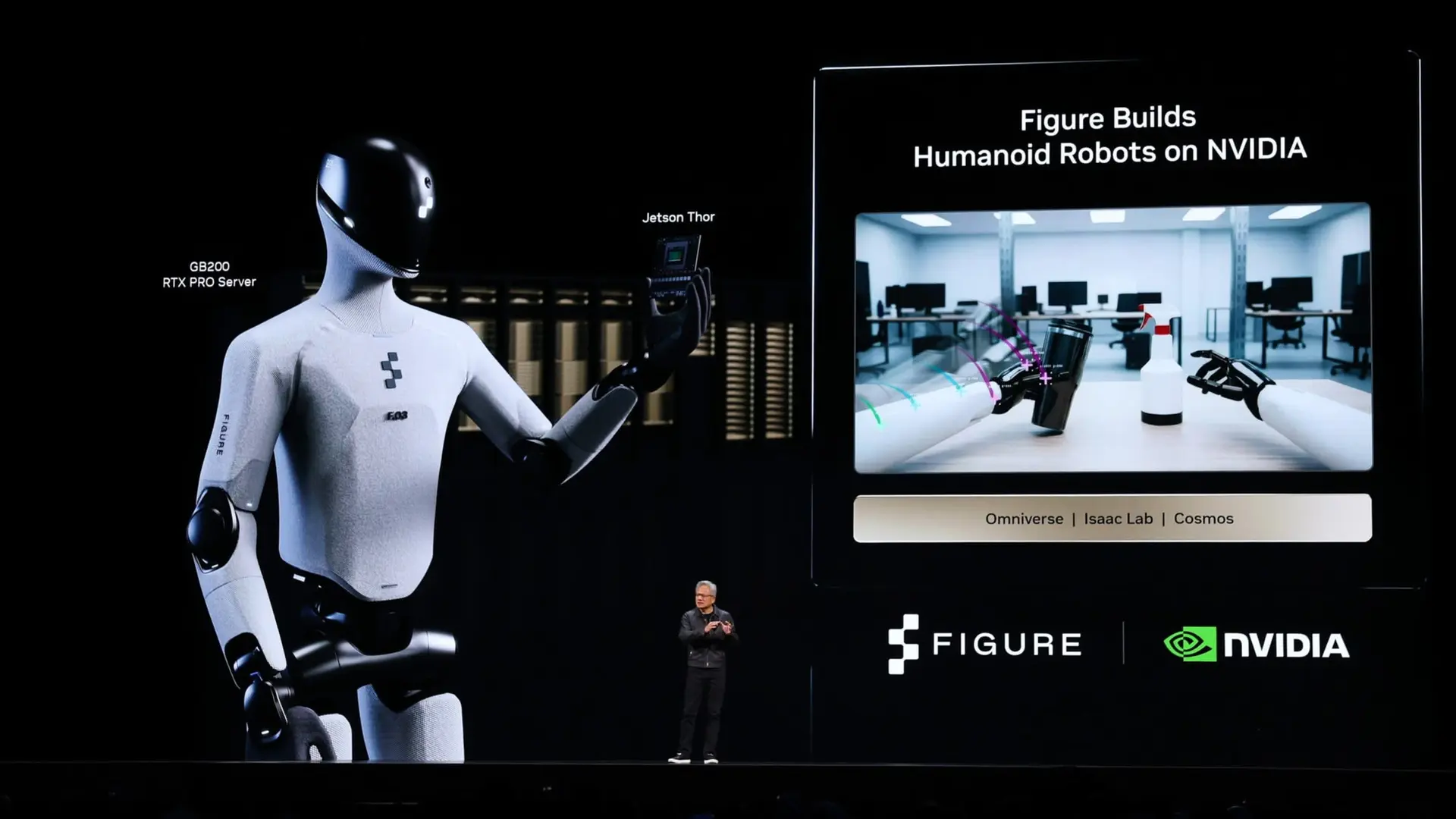

Agentic AI refers to autonomous, context-aware systems that can manage complex tasks independently, learn from outcomes, and adjust their strategies dynamically based on the inputs they observe. For defenders, this technology can improve threat detection, manage vulnerabilities proactively, and automate incident response. At the same time, malicious actors can use agentic AI to automate attacks at a scale and speed that may overwhelm traditional defence mechanisms. Organisations must now implement robust testing and runtime controls to govern these AI agents.

Why it matters

Autonomous agentic AI systems reduce the effectiveness of traditional, less adaptive defence mechanisms and create a visibility gap in detecting sophisticated, automated attacks. This increases the oversight burden on IT security and platform operators. They must now test AI agents thoroughly and enforce runtime controls to ensure those agents behave as intended. It also raises due-diligence requirements, both for organisations' own AI deployments and for their exposure to AI-driven attacks.