AI-generated code introduces a new software supply chain vulnerability: "slopsquatting". It arises when large language models (LLMs) hallucinate software packages that do not exist. Attackers can register malicious packages under those names, so that developers who install the dependencies an LLM suggested download the attacker's code instead. A study showed that LLMs frequently suggest packages that don't exist, and that a significant percentage of the hallucinated names recur across repeated prompts. That consistency is what makes slopsquatting viable: attackers can identify the commonly hallucinated names in advance and claim them. No attacks have been reported yet, but security experts warn that this is a predictable and easily weaponized attack surface. The risk is amplified by the growing reliance on AI code generation and by the inherent difficulty of verifying the safety of open-source components. Lowering the model's temperature setting, which reduces randomness in its output, and testing generated code in isolated environments can reduce the risk.
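
As one illustration of how a team might catch a hallucinated dependency before it is installed, the sketch below compares the package names in a requirements list against a list of packages that have already been reviewed. It is a minimal example under stated assumptions, not a complete defense: `VETTED_PACKAGES`, `requirement_name`, and `unvetted` are hypothetical names invented here, the allowlist contents are placeholders, and the parser only handles simple `name==version` style lines. Note that checking whether a name exists on a public registry is not enough on its own, because a slopsquatted package exists by design.

```python
import re
from typing import Iterable, List, Optional, Set

# Hypothetical allowlist: packages this team has already reviewed.
VETTED_PACKAGES = {"requests", "numpy", "flask"}


def normalize(name: str) -> str:
    """Normalize a package name as PEP 503 describes:
    lowercase, with runs of '-', '_' and '.' collapsed to a single '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def requirement_name(line: str) -> Optional[str]:
    """Extract the package name from a simple requirements line.

    Returns None for blank lines, comments, and option lines such as '-r'.
    """
    line = line.split("#", 1)[0].strip()  # drop trailing comments
    if not line or line.startswith("-"):
        return None
    match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
    return normalize(match.group(0)) if match else None


def unvetted(requirements: Iterable[str],
             vetted: Set[str] = VETTED_PACKAGES) -> List[str]:
    """Return the normalized names that are not on the allowlist."""
    vetted_normalized = {normalize(name) for name in vetted}
    names = (requirement_name(line) for line in requirements)
    return [n for n in names if n is not None and n not in vetted_normalized]


if __name__ == "__main__":
    suggested = [
        "requests==2.31.0",
        "Flask_Utils_Pro>=1.0  # name suggested by a code assistant",
        "numpy",
    ]
    for name in unvetted(suggested):
        print(f"Review before installing: {name}")
```

Anything the check flags would still need a human to look at it, ideally inside an isolated environment, before it is added to the allowlist.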

Related Articles

Battlefield AI's complex implications

Read more about Battlefield AI's complex implications →

Chrome attracts potential buyers

Read more about Chrome attracts potential buyers →

DeepSeek AI: Security Concerns Arise

Read more about DeepSeek AI: Security Concerns Arise →

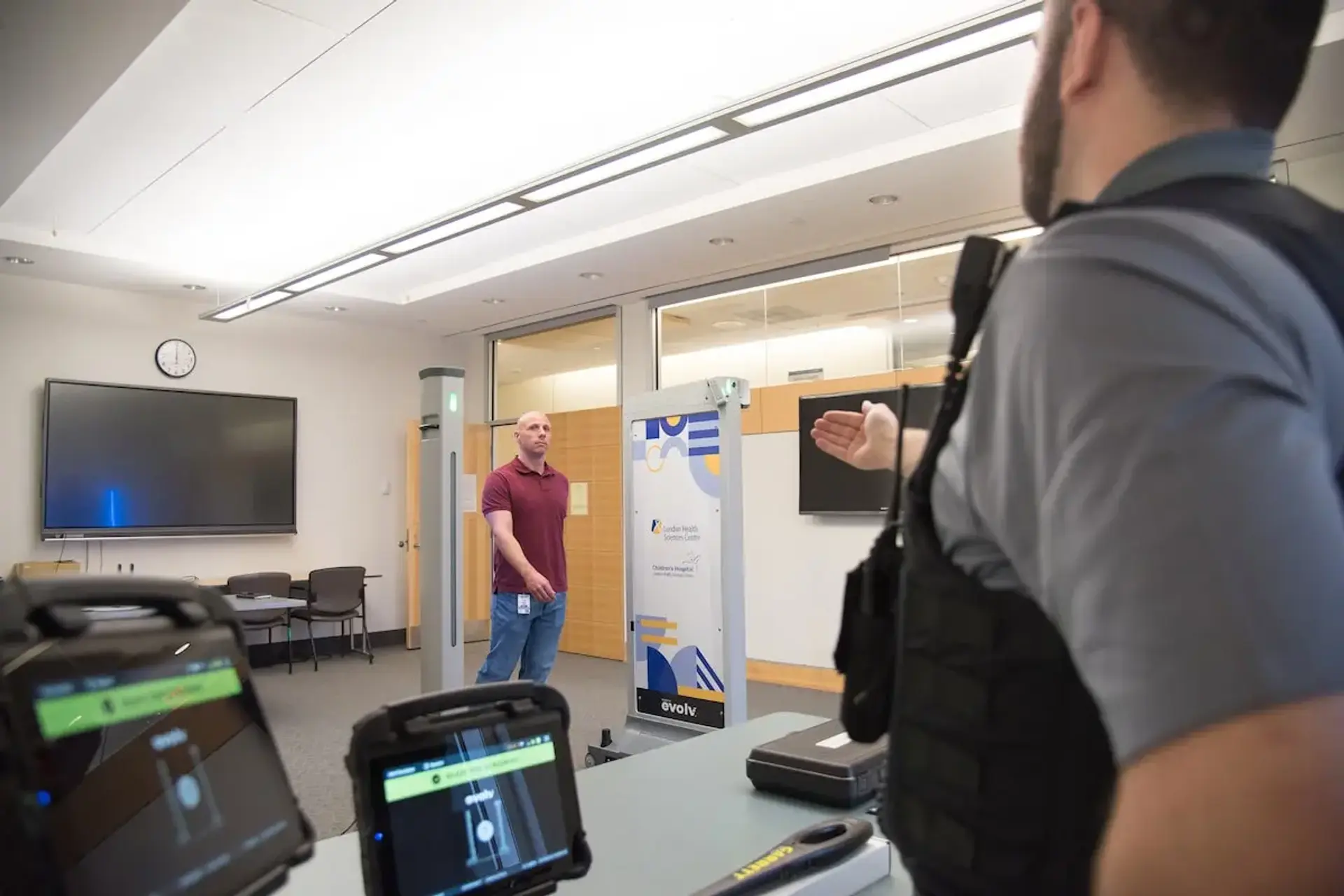

AI Weapons Detection Deployed

Read more about AI Weapons Detection Deployed →