A developer has created a 'free speech eval' that tests how AI chatbots, such as OpenAI's ChatGPT, respond to controversial topics. The test benchmarks how willing these models are to engage with sensitive subjects. Its results could expose biases or limitations in a model's behaviour and show how different chatbots balance free expression against content moderation policies.

The 'free speech eval' may also affect how AI chatbots are developed and deployed. Knowing how these models handle controversial topics is a prerequisite for using them responsibly and ethically. The results of such tests could steer future design choices toward more open or more restricted AI communication, depending on the priorities of developers and stakeholders.

Ultimately, this benchmark is a tool for assessing how well AI behaviour aligns with societal values around free speech and responsible AI use. As AI chatbots become more integrated into daily life, their capacity to navigate complex and controversial discussions matters more and more.

Related Articles

Copilot Studio Gains Autonomy

Read more about Copilot Studio Gains Autonomy →

Google Suspends Millions of Accounts

Read more about Google Suspends Millions of Accounts →

Opera Mini gets Aria AI

Read more about Opera Mini gets Aria AI →

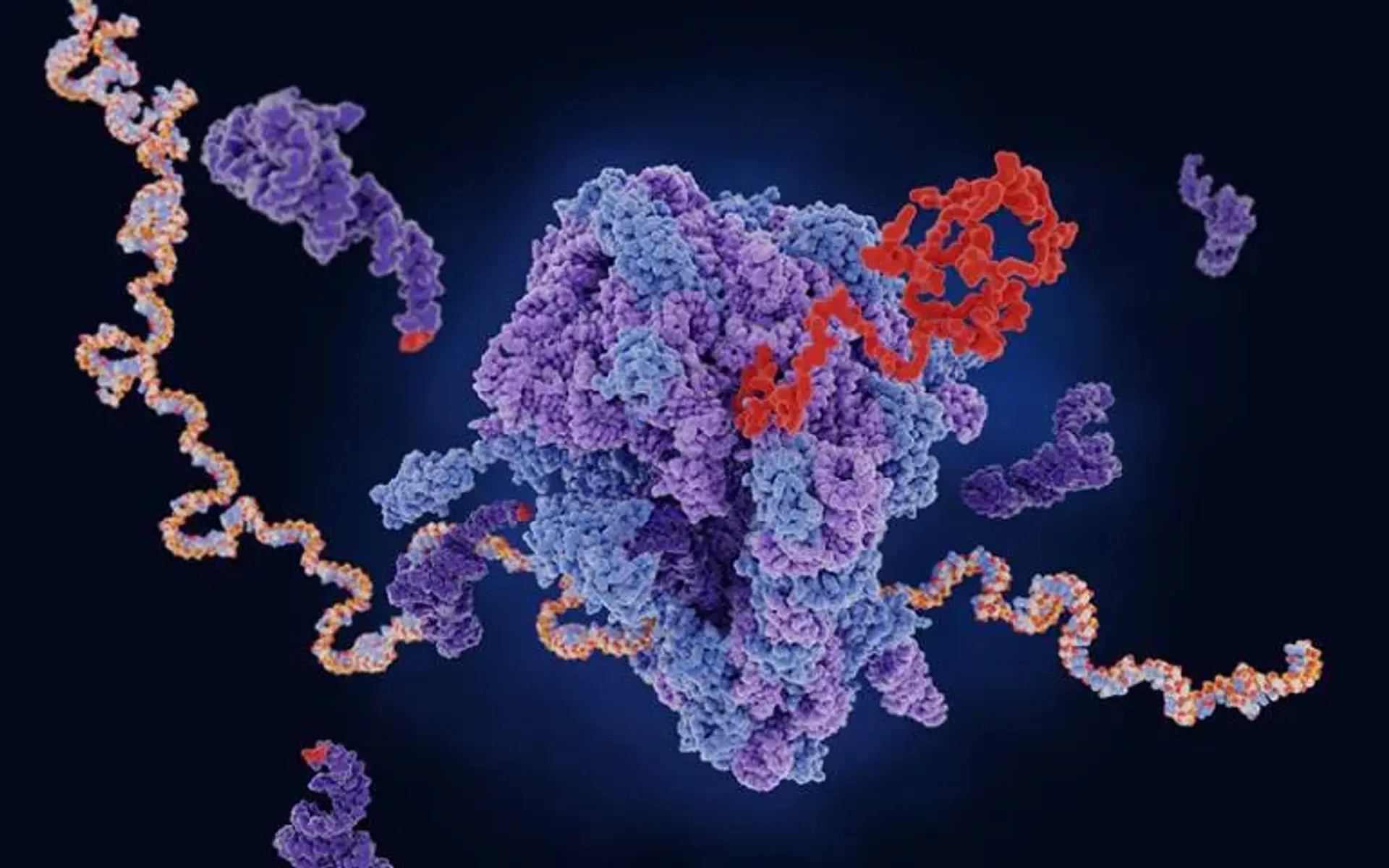

AI Maps Protein Misfolding

Read more about AI Maps Protein Misfolding →