What happened

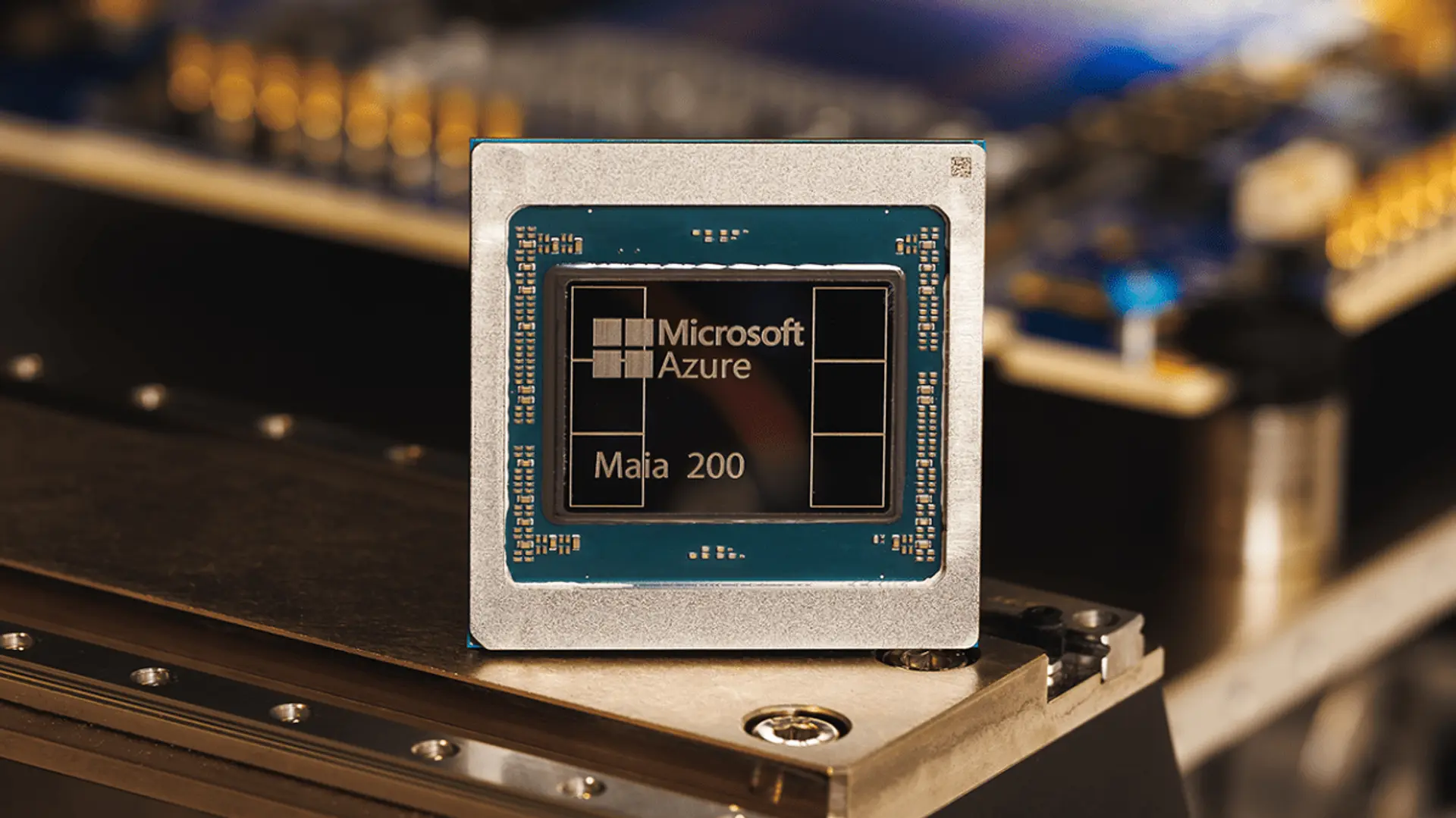

Microsoft introduced its Maia chip, which packs more than 100 billion transistors and delivers over 10 petaflops at 4-bit precision and roughly 5 petaflops at 8-bit precision. The hardware substantially expands Microsoft's in-house AI inference capacity and raises the performance ceiling for its proprietary AI workloads relative to previous generations, resetting the baseline for the company's internal AI compute.

Why it matters

Because this high-performance inference hardware is proprietary, certain AI workloads become more dependent on Microsoft's internal hardware ecosystem. That raises the oversight burden for platform operators and IT procurement teams, who must manage the integration and lifecycle of specialised, non-standard compute infrastructure. It also leaves those managing external hardware supply chains with less visibility into the compute behind these workloads.
