DeepSeek has launched its V3.1 AI model, positioned as a challenger to OpenAI's GPT-5 with an emphasis on capability and cost-effectiveness. The model combines reasoning and conversational skills in a single system. DeepSeek V3.1 supports a 128k token context window and offers a hybrid reasoning mode: the same model can run in either a 'thinking' or a 'non-thinking' mode, and the mode is selected through its chat template.
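
As a rough illustration of how a chat template can select the mode, the sketch below builds a prompt that ends in either an opening or a closing reasoning marker. The marker strings and the `build_prompt` helper are hypothetical stand-ins, not DeepSeek's actual special tokens or API; the template published with the model is the authority on the real format.

```python
# Hypothetical markers for illustration only; the real chat template
# uses the model's own special tokens, which are not reproduced here.
USER_TAG = "<User>"
ASSISTANT_TAG = "<Assistant>"
THINK_OPEN = "<think>"    # assumed: prompt ends here, so the model starts reasoning
THINK_CLOSE = "</think>"  # assumed: reasoning is marked as already closed


def build_prompt(user_message: str, thinking: bool) -> str:
    """Return a single-turn prompt whose final marker selects the mode."""
    prefix = f"{USER_TAG}{user_message}{ASSISTANT_TAG}"
    return prefix + (THINK_OPEN if thinking else THINK_CLOSE)


if __name__ == "__main__":
    print(build_prompt("Factor 91.", thinking=True))
    print(build_prompt("Factor 91.", thinking=False))
```

The point of the design is that no separate model or endpoint is needed: the only difference between the two modes is the final marker in the prompt.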

The V3.1 model has 671B total parameters, of which 37B are active per token. It uses a Mixture-of-Experts design, so only a subset of the network runs for each token, which reduces inference costs. It has been optimised for tool use and agent tasks, supporting custom code and search agents. DeepSeek's models are gaining recognition for delivering performance comparable to OpenAI and Anthropic at a lower cost. The model is trained using the UE8M0 FP8 scale data format to ensure compatibility with microscaling data formats.
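
Two of the figures above can be made concrete with a little arithmetic. The sketch below computes the share of parameters active per token and decodes a power-of-two scale byte. It assumes UE8M0 matches the E8M0 scale encoding in the OCP Microscaling specification (8 exponent bits, no sign or mantissa, bias 127, `0xFF` reserved for NaN); the function names are illustrative and this is not DeepSeek's training code.

```python
import math

TOTAL_PARAMS = 671e9   # total parameters, from the article
ACTIVE_PARAMS = 37e9   # parameters active per token, from the article


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters used for a single token."""
    return active / total


def decode_e8m0(byte: int) -> float:
    """Decode an exponent-only scale byte (assumed E8M0 layout, bias 127)."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("scale must fit in one unsigned byte")
    if byte == 0xFF:
        return math.nan  # reserved NaN encoding in the assumed layout
    return 2.0 ** (byte - 127)


if __name__ == "__main__":
    print(f"{active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS):.1%} of parameters active")
    print(decode_e8m0(127), decode_e8m0(130))
```

Roughly 5.5% of the parameters take part in each token's forward pass. Under the assumed layout, every scale is an exact power of two, so applying it only shifts the exponent of the FP8 values it covers.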

DeepSeek V3.1 demonstrates strong performance in coding, mathematics and general knowledge, positioning it as a practical choice for both research and applied AI development. It excels in code generation, debugging and refactoring, making it suitable for enterprise applications. The model is available on Hugging Face, promoting open-source AI development.

Related Articles

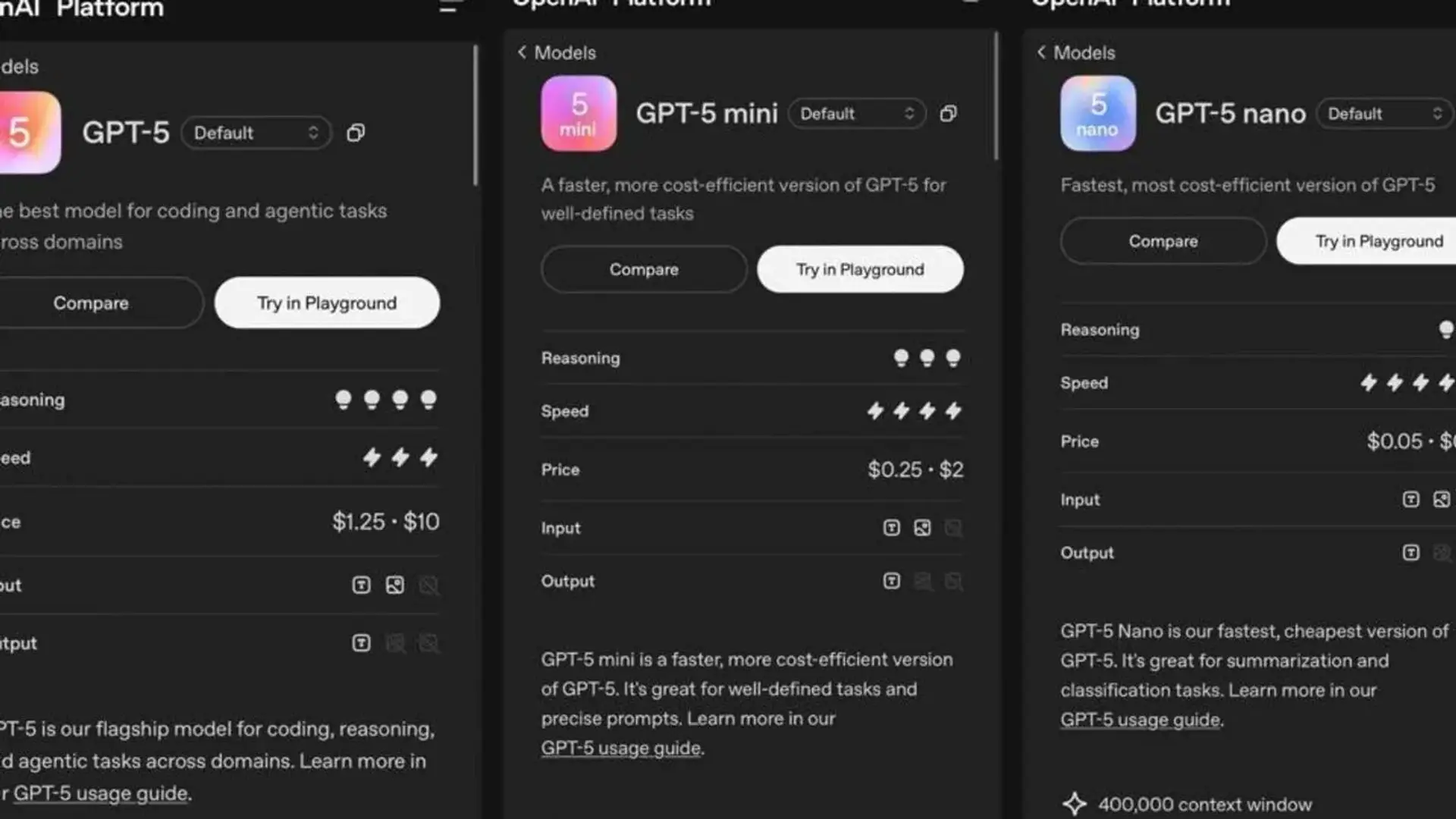

OpenAI unveils GPT-5 Enterprise

Read more about OpenAI unveils GPT-5 Enterprise →

GPT-5: Incremental AI Advance

Read more about GPT-5: Incremental AI Advance →

OpenAI Launches GPT-5 Model

Read more about OpenAI Launches GPT-5 Model →

GPT-5 Model Unveiled by OpenAI

Read more about GPT-5 Model Unveiled by OpenAI →